The Lost Dao

(D)ecisions about the development and exploitation of computer technology must be made not only "in the public interest" but in the interest of giving the public itself the means to enter into the decision-making processes that will shape their future. — J. C. R. Licklider, "Computers and Government", 1980[1]

Can a ⿻ understanding of society lay the foundation for social transformations as dramatic as those that fields like quantum mechanics and ecology have brought to natural sciences, physical technology and our relationship to nature? Liberal democracies often celebrate themselves as pluralistic societies, which would seem to indicate they have already drawn the available lessons from ⿻ social science. Yet despite this formal commitment to pluralism and democracy, almost every country has been forced by the limits of available information systems to homogenize and simplify social institutions in a monist atomist mold that runs into direct conflict with such values. The great hope of ⿻ social science and ⿻ built on top of it is to use the potential of information technology to begin to overcome these limitations.

⿻ launches

This was the mission pursued by the younger generation that followed in Wiener's lead but had a more human/social scientific background. This generation included a range of pioneers of applied cybernetics such as the anthropologist Margaret Mead[2] (who heavily influenced the aesthetics of the internet), W. Edwards Deming[3] (whose influence on Japanese and to a lesser extent Taiwanese inclusive industrial quality practices we saw above) and Stafford Beer[4] (who pioneered business cybernetics and has become something of a guru for social applications of Wiener's ideas, including in Chile's brief cybernetic socialist regime of the early 1970s). They built on his vision in a more pragmatic mode, shaping technologies that defined the information era. Yet the most ambitious and systemic impact of this work was heralded by a blip moving across the sky in October 1957, a story masterfully narrated by M. Mitchell Waldrop in his book The Dream Machine, from which much of what follows derives.[5]

Sputnik and the Advanced Research Projects Agency

The launch by the Soviet Union of the first orbital satellite was followed a month later by the Gaither Committee report, claiming that the US had fallen behind the Soviets in missile production. The ensuing moral panic forced the Eisenhower administration into emergency action to reassure the public of American strategic superiority. Yet despite, or perhaps because of, his own martial background, Eisenhower deeply distrusted what he labeled America's "military-industrial complex", while having boundless admiration for scientists.[6] He thus aimed to channel the passions of the Cold War into a national strategy to improve scientific research and education.[7]

While that strategy had many prongs, a central one was the establishment, within the Department of Defense, of a quasi-independent, scientifically administered Advanced Research Projects Agency (ARPA) that would harness expertise from universities to accelerate ambitious and potentially transformative scientific projects with potential defense applications.

While ARPA began with many aims, some of which were soon assigned to other newly formed agencies such as the National Aeronautics and Space Administration (NASA), it quickly found a niche under its second director, Jack Ruina, as the government's boldest supporter of ambitious and "far out" projects. One area was to prove particularly representative of this risk-taking style: the Information Processing Techniques Office led by Joseph Carl Robnett (JCR) Licklider.

Licklider came from yet another field, distinct from the political economy of George, the sociology of Simmel, the political philosophy of Dewey and the mathematics of Wiener: "Lick", as he was commonly known, received his PhD in 1942 in psychoacoustics. After spending his early career developing applications to human performance in high-stakes interactions with technology (especially aviation), he turned his attention increasingly to the possibility of human interaction with the fastest-growing form of machinery: the "computing machine". He joined the Massachusetts Institute of Technology (MIT) to help found Lincoln Laboratory and the psychology program. He then moved to the private sector as Vice President of Bolt, Beranek and Newman (BBN), one of the first MIT spin-off research start-ups.

Having persuaded BBN's leadership to shift their attention towards computing devices, Lick began to develop an alternative technological vision to the then-emerging field of Artificial Intelligence that drew on his psychological background to propose "Man-Computer Symbiosis", as his path-breaking 1960 paper was titled. Lick hypothesized that while "in due course...'machines' will outdo the human brain in most of the functions we now consider exclusively within its province...(t)here will...be a fairly long interim during which the main advances will be made by men and computers working together...those years should be intellectually the most creative and exciting in the history of mankind."[8]

These visions turned out to arrive at precisely the right moment for ARPA, as it was in search of bold missions with which it could secure its place in the rapidly coalescing national science administration landscape. Ruina appointed Lick to lead the newly-formed Information Processing Techniques Office (IPTO). Lick harnessed the opportunity to build and shape much of the structure of what became the field of Computer Science.

The Intergalactic Computer Network

While Lick spent only two years at ARPA, those years laid the groundwork for much of what followed in the next forty years of the field. He seeded a network of "time sharing" projects around the US that would enable several individual users to interact directly with previously monolithic large-scale computing machines, taking a first step towards the age of personal computing. The five universities thus supported (Stanford, MIT, UC Berkeley, UCLA and Carnegie Mellon) went on to become the academic core of the emerging field of computer science.

Beyond establishing the computational and scientific backbone of modern computing, Lick was particularly focused on the "human factors" in which he specialized. He aimed to make the network represent these ambitions in two ways that paralleled the social and personal aspects of humanity. On the one hand, he gave particular attention and support to projects he believed could bring computing closer to the lives of more people, integrating with the functioning of human minds. The leading example of this was the Augmentation Research Center established by Douglas Engelbart at the Stanford Research Institute.[9] On the other hand, he dubbed the network of collaboration between these hubs, with his usual tongue-in-cheek humor, the "Intergalactic Computer Network", and hoped it would provide a model of computer-mediated collaboration and co-governance.[10]

This project bore fruit in a variety of ways, both immediately and longer-term. Engelbart quickly invented many foundational elements of personal computing, including the mouse, hypertext and a bitmapped screen that was a core precursor to the graphical user interface. His demonstration of this work as the "oNLine system" (NLS), six short years after Lick's initial funding, is remembered as "the mother of all demos" and a defining moment in the development of personal computers.[11] This in turn helped persuade Xerox Corporation to establish their Palo Alto Research Center (PARC), which went on to pioneer much of personal computing. US News and World Report lists four of the five departments Lick funded as the top four computer science departments in the country.[12] Most importantly, after Lick's departure to the private sector, the Intergalactic Computer Network developed into something less fanciful and more profound under the leadership of his collaborator, Robert W. Taylor.

A network of networks

Taylor and Lick were naturally colleagues. While Taylor never completed his PhD, his research field was also psychoacoustics, and he served as Lick's counterpart at NASA, which had just split from ARPA, during Lick's leadership at IPTO. In 1965, shortly after Lick's departure, Taylor moved to IPTO to help develop Lick's networking vision under the leadership of Ivan Sutherland. Sutherland soon returned to academia, leaving Taylor in charge of IPTO and of the network that Taylor more modestly labeled the ARPANET. He used his authority to commission Lick's former home of BBN to build the first working prototype of the ARPANET backbone. With momentum growing through Engelbart's demonstration of personal computing and ARPANET's first successful trials, Lick and Taylor articulated their vision for the future possibilities of personal and social computing in their 1968 article "The Computer as a Communication Device", describing much of what would become the culture of personal computing, the internet and even smartphones several decades later.[13]

By 1969, Taylor felt the mission of the ARPANET was on track to success and moved on to Xerox PARC, where he led the Computer Science Laboratory in developing much of this vision into working prototypes. These in turn became the core of the modern personal computer that Steve Jobs famously "stole" from Xerox to build the Macintosh, while ARPANET evolved into the modern internet.[14] In short, the technological revolutions of the 1980s and 1990s trace clearly back to this quite small group of innovators in the 1960s. While we will turn to these more broadly-known later developments shortly, it is worth lingering on the core of the research program that made them possible.

At the core of the development of what became the internet was replacing centralized, linear and atomized structures with ⿻ relationships and governance. This happened at three levels that eventually converged in the early 1990s as the World Wide Web:

- packet switching to replace centralized switchboards,

- hypertext to replace linear text,

- and open standard setting processes to replace both government and corporate top-down decision-making

All three ideas had their seeds at the edges of the early community Lick formed and grew into core features of the ARPANET community.

While the concepts of networks, redundancy and sharing permeate Lick's original vision, it was Paul Baran's 1964 report "On Distributed Communications" that clearly articulated how and why communications networks should strive for a ⿻ rather than centralized structure.[15]

Baran argued that while centralized switchboards achieved high reliability at low cost under normal conditions, they were fragile to disruptions. Conversely, networks with many centers could be built with cheap and unreliable components and still withstand even quite devastating attacks by "routing around damage", taking a dynamic path through the network based on availability rather than prespecified planning. While Baran received support and encouragement from scientists at Bell Labs, his ideas were roundly dismissed by AT&T, the national telephone monopoly in whose culture high-quality centralized dedicated machinery was deeply entrenched.
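To make Baran's "routing around damage" argument concrete, the following Python sketch compares a centralized star, in which every node hangs off one switchboard, with a small distributed mesh. It is a toy illustration under stated assumptions, not Baran's actual model: the `star` and `ring_mesh` topologies and the `route` helper are hypothetical, and breadth-first search stands in for whatever dynamic routing a real packet network would use.

```python
from collections import deque


def star(n):
    """Centralized topology: node 0 is a switchboard linked to nodes 1..n-1."""
    graph = {0: set(range(1, n))}
    for i in range(1, n):
        graph[i] = {0}
    return graph


def ring_mesh(n):
    """Distributed topology (hypothetical): each node links to its ring
    neighbours and to the node halfway round, so it has several ways out."""
    graph = {i: set() for i in range(n)}
    for i in range(n):
        for j in ((i + 1) % n, (i + n // 2) % n):
            graph[i].add(j)
            graph[j].add(i)
    return graph


def route(graph, src, dst, failed=frozenset()):
    """Breadth-first search for a path that avoids failed nodes.

    Returns the path as a list of nodes, or None if src and dst are cut off.
    """
    if src in failed or dst in failed:
        return None
    prev = {src: None}  # node -> node we reached it from
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in sorted(graph[node]):  # sorted for deterministic output
            if nxt not in prev and nxt not in failed:
                prev[nxt] = node
                queue.append(nxt)
    return None


if __name__ == "__main__":
    print(route(star(8), 1, 5))                      # [1, 0, 5]
    print(route(star(8), 1, 5, failed={0}))          # None: the hub is down
    print(route(ring_mesh(8), 1, 3, failed={2, 5}))  # [1, 0, 4, 3]
```

With no failures, the star routes every message through its switchboard; once node 0 fails, every pair of remaining nodes is cut off. The mesh, with only three links per node, still finds a detour when two nodes fail, and no single failure disconnects any pair of surviving nodes, which is the sense in which many cheap, redundant paths can outlast one reliable center.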

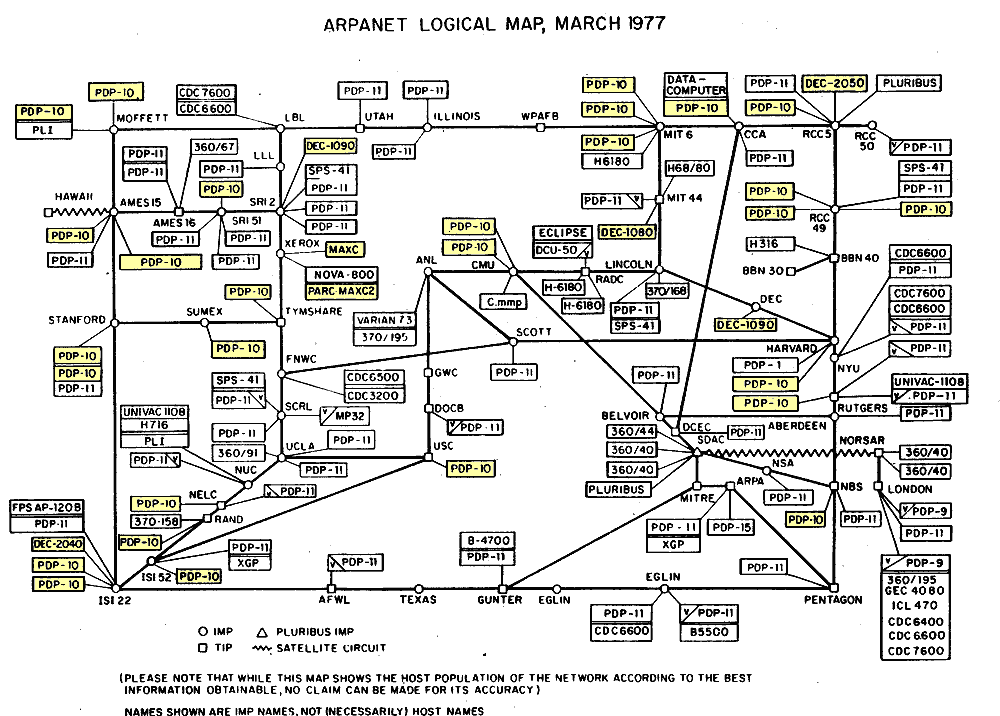

Despite the apparent threat it posed to that private interest, packet switching caught the positive attention of another organization that owed its genesis to the threat of devastating attacks: ARPA. At a 1967 conference, ARPANET's first program manager, Lawrence Roberts, learned of packet switching through a presentation by Donald Davies, who had developed the same idea concurrently with, and independently of, Baran. Roberts then drew on Baran's arguments, which he soon learned of, to sell the concept to the team. Figure A shows the decentralized logical structure of the early ARPANET that resulted.

If one path to networked thinking was thus motivated by technical resilience, another was motivated by creative expression. Ted Nelson trained as a sociologist and developed into an artist; his work was inspired by cybernetic pioneer Margaret Mead's vision of democratic and pluralistic media, which he encountered when he hosted her on a 1959 visit to his campus. Following these early experiences, he devoted his life, beginning in his early 20s, to the development of "Project Xanadu", which aimed to create a revolutionary human-centered interface for computer networks. Because Xanadu had so many components that Nelson considered indispensable, it was not fully released until the 2010s, but its core idea, co-developed with Engelbart, was what Nelson labeled "hypertext".

Nelson imagined hypertext as a way to liberate communication from the tyranny of a linear interpretation imposed by an original author, empowering a "pluralism" (as he labeled it) of paths through material via a network of (bidirectional) links connecting it in a variety of sequences.[16] This "choose your own adventure"[17] quality is most familiar today to internet users in their browsing experiences, but it showed up earlier in commercial products in the 1980s (such as computer games based on HyperCard). Nelson imagined that such ease of navigation and recombination would enable the formation of new cultures and narratives at unprecedented speed and scope. The power of this approach became apparent to the broader world when Tim Berners-Lee made it central to his "World Wide Web" approach to navigation in the early 1990s, ushering in the era of broad adoption of the internet.
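Nelson's bidirectional links can be illustrated with a small data structure. The Python sketch below is a hypothetical `LinkStore`, not Xanadu's actual design: it records each link at both ends, so a reader can follow it backwards as well as forwards, and its `paths` method enumerates the several reading orders that the same few passages allow.

```python
from collections import defaultdict


class LinkStore:
    """Hypothetical store of bidirectional links between named passages.

    Each link is recorded at both ends, so it can be followed either way.
    """

    def __init__(self):
        self._out = defaultdict(set)  # passage -> passages it links to
        self._in = defaultdict(set)   # passage -> passages that link to it

    def link(self, src, dst):
        self._out[src].add(dst)
        self._in[dst].add(src)

    def neighbours(self, passage):
        """Passages one step away, following links forwards or backwards."""
        return self._out[passage] | self._in[passage]

    def paths(self, start, length):
        """All reading orders of `length` distinct passages beginning at `start`."""
        found = []

        def extend(path):
            if len(path) == length:
                found.append(path)
                return
            for nxt in sorted(self.neighbours(path[-1])):
                if nxt not in path:  # never revisit a passage
                    extend(path + [nxt])

        extend([start])
        return found


if __name__ == "__main__":
    store = LinkStore()
    store.link("intro", "mead")
    store.link("intro", "xanadu")
    store.link("xanadu", "web")
    print(store.paths("intro", 2))  # [['intro', 'mead'], ['intro', 'xanadu']]
    print(store.paths("web", 3))    # [['web', 'xanadu', 'intro']]
```

Because every link is stored at both ends, a reader who starts at `"web"` can reach `"intro"` by walking the `"xanadu"` link backwards, something a link recorded only at its source would not permit.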

While Engelbart and Nelson were lifelong friends and shared many similar visions, they took very different paths to realizing them, each of which (as we will see) held an important seed of truth. Engelbart, while also a visionary, was a consummate pragmatist and a smooth political operator, and went on to be recognized as the pioneer of personal computing. Nelson was an artistic purist whose decades-long pursuit of a Xanadu embodying all seventeen of his enumerated principles buried his career.

As an active participant in Lick's network, Engelbart, by contrast, tempered his ambition with the need to persuade other network nodes to support, adopt or at least inter-operate with his approach. As different user interfaces and networking protocols proliferated, he retreated from the pursuit of perfection. Engelbart, and even more his colleagues across the project, instead began to develop a culture of collegiality, facilitated by the communication network they were building, across the often competing universities they worked at. The physical separation made tight coordination of networks impossible, but work to ensure minimal inter-operation and to spread clear best practices became a core characteristic of the ARPANET community.

This culture manifested in the development of the "Request for Comments" (RFC) process by Steve Crocker, arguably one of the first "wiki"-like processes of informal and mostly additive collaboration across many geographically and sectorally (governmental, corporate, university) dispersed collaborators. This in turn contributed to the common Network Control Protocol and, eventually, the Transmission Control and Internet Protocols (TCP/IP), developed under the famously mission-driven but inclusive and responsive leadership of Vint Cerf and Bob Kahn between 1974, when TCP was first circulated as RFC 675, and 1983, when TCP/IP became the official ARPANET protocol suite. At the core of the approach was the vision of a "network of networks" that gave the "internet" its name: that many diverse and local networks (at universities, corporations and government agencies) could inter-operate sufficiently to permit near-seamless communication across long distances, in contrast to centralized networks (such as France's concurrent Minitel) that were standardized from the top down by a government.[18] Together these three dimensions of networking (technical communication protocols, communicative content and the governance of standards) converged to create the internet we know today.

Triumph and tragedy

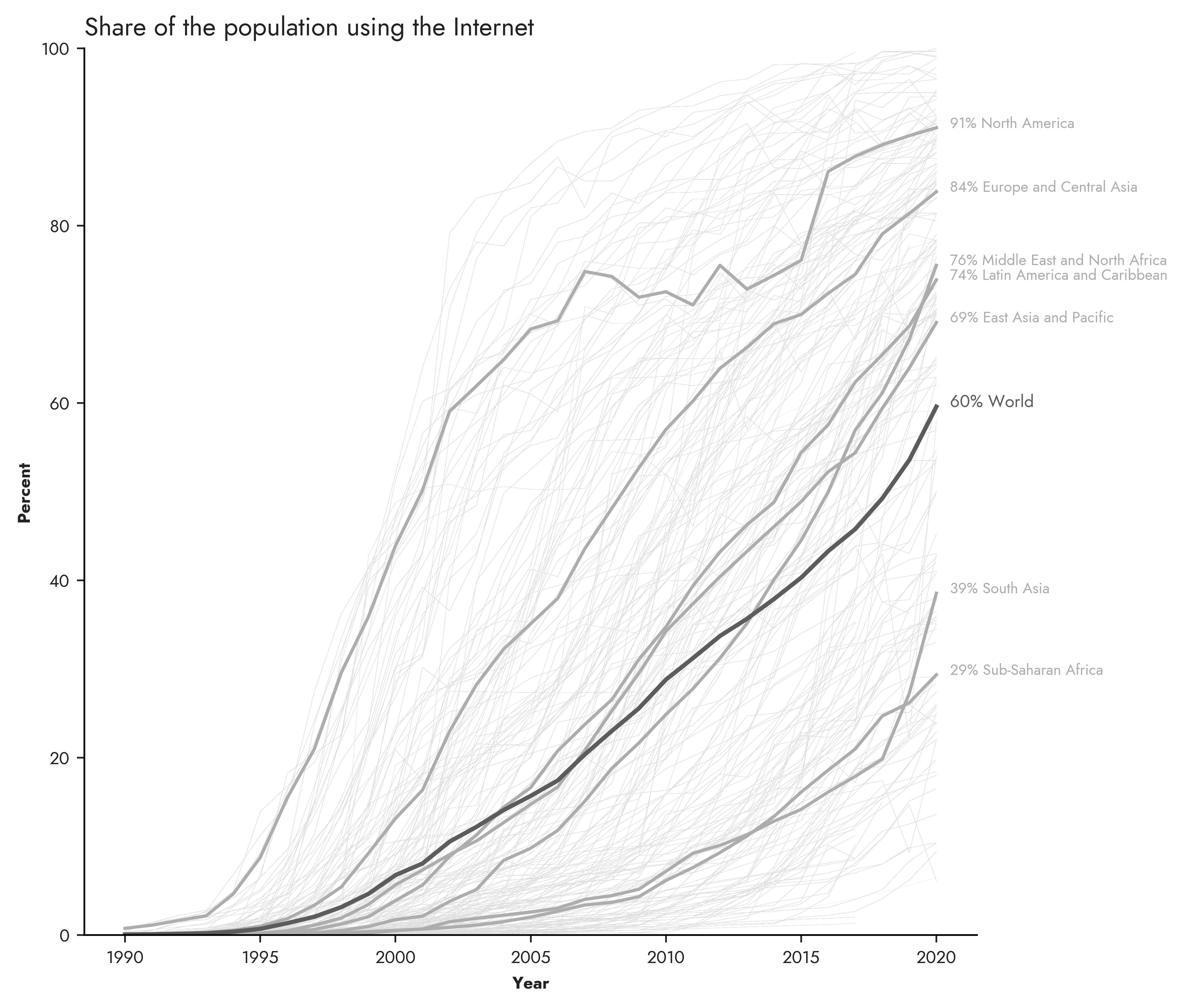

Much of what resulted from this project is so broadly known it hardly bears repeating here. During the 1970s, Taylor's Xerox PARC produced a series of expensive, and thus commercially unsuccessful, but revolutionary "personal workstations" that incorporated much of what became the personal computer of the 1990s. At the same time, as computer components became available to broader populations, businesses like Apple and Microsoft began to make cheaper and less user-friendly machines broadly available. Struggling to commercialize its inventions, Xerox allowed Apple co-founder Steve Jobs access to its technology in exchange for a stake, resulting in the Macintosh, which ushered in modern personal computing, and in Microsoft's subsequent mass scaling through its Windows operating system. By 2000, a majority of Americans had a personal computer in their homes. Internet use has steadily spread, as pictured in Figure B.

The internet and its discontents

And much as it had developed in parallel from the start, the internet grew to connect those personal computers. During the late 1960s and early 1970s, a variety of networks grew up alongside ARPANET, the largest among them, including at universities, in governments outside the United States, in international standards bodies and inside corporations like BBN and Xerox. Under the leadership of Kahn and Cerf and with support from ARPA (now renamed DARPA to emphasize its "defense" focus), these networks began to harness the TCP/IP protocol to inter-operate. As this network scaled, DARPA looked for another agency to maintain it, given the limits of its advanced technology mission. While many US government agencies tried their hand, the National Science Foundation had the widest group of scientific participants, and its NSFNET quickly grew to be the largest network, leading ARPANET to be decommissioned in 1990. At the same time, NSFNET began to interconnect with networks in other wealthy countries.

One of those was Switzerland, where British researcher Tim Berners-Lee, working at CERN, proposed in 1989 a "web browser", "web server" and a Hypertext Markup Language (HTML) that fully connected hypertext to packet switching and made internet content far more available to a broad set of end users. From the launch of Berners-Lee's World Wide Web (WWW) in 1991, internet usage grew from roughly 4 million people (mostly in North America) to over 400 million (mostly around the world) by the end of the millennium. With internet start-ups booming in Silicon Valley and life for many beginning its migration online through the computers many now had in their homes, the era of networked personal computing (of "The Computer as a Communication Device") had arrived.[20]

In the boom and bust euphoria of the turn of the millennium, few people in the tech world paid attention to the specter haunting the industry, the long-forgotten Ted Nelson. Stuck on his decades-long quest for the ideal networking and communication system, Nelson ceaselessly warned of the insecurity, exploitative structure and inhumane features of the emerging WWW design. Without secure identity systems (Xanadu Principles 1 and 3), a mixture of anarchy and land-grabs by nation states and corporate actors would be inevitable. Without embedded protocols for commerce (Xanadu Principles 9 and 15), online work would become devalued or the financial system controlled by monopolies. Without better structures for secure information sharing and control (Xanadu Principles 8 and 16), both surveillance and information siloing would be pervasive. Whatever its apparent success, the WWW-Internet was doomed to end badly.

While Nelson was something of an oddball, his concerns were surprisingly broadly shared among even the mainstream internet pioneers who would seem to have every reason to celebrate their success. As early as 1980, while TCP/IP was coalescing, Lick foresaw in his classic essay "Computers and Government" "two scenarios" (one bad, the other good) for the future of computing: it could be dominated and its potential stifled by monopolistic corporate control, or there could be a full societal mobilization that made computing serve and support democracy.[21] In the former scenario, Lick projected all kinds of social ills that might make the advent of the information age a net detriment to democratic social flourishing. These included:

- Pervasive surveillance and public distrust of government.

- Paralysis of government's ability to regulate or enforce laws, as they fall behind the dominant technologies citizens use.

- Debasement of creative professions.

- Monopolization and corporate exploitation.

- Pervasive digital misinformation.

- Siloing of information that undermines much of the potential of networking.

- Government data and statistics becoming increasingly inaccurate and irrelevant.

- Control by private entities of the fundamental platforms for speech and public discourse.

The wider internet adoption spread, the less relevant such complaints appeared. Government did not end up playing as central a role as Lick imagined, but by 2000 most of the few commentators who were even aware of his warnings assumed we were surely on the path of his scenario 2. Yet in a few places, concern was growing by late in the first decade of the new millennium. Virtual reality pioneer Jaron Lanier sounded the alarm in two books, You Are Not a Gadget and Who Owns the Future?, articulating his own and Nelson's versions of Lick's concerns about the future of the internet.[22] While these initially appeared simply an amplification of Nelson's fringe ideas, a series of world events that we discuss in "Information Technology and Democracy: a Widening Gulf" above eventually brought much of the world around to seeing the limitations of the internet economy and society that had developed, helping ignite the Techlash. These patterns bore a striking resemblance to Lick's and Nelson's warnings. The victory of the internet may have been far more Pyrrhic than it at first seemed.

Losing our dao

How did we fall into a trap clearly described by the founders of hypertext and the internet? After having led the development of the internet, why did government and the universities not rise to the challenge of the information age following the 1970s?

It was these warning signs that motivated Lick to put pen to paper in 1980, as the focus of ARPA (now DARPA) shifted away from support for networking protocols towards more directly weapons-oriented research. Lick saw this shift as resulting from two forces on opposite ends of the political spectrum. On the one hand, with the rise of "small government conservatism" that would later be labeled "neoliberalism", government was retreating from proactively funding and shaping industry and technology. On the other hand, the Vietnam War turned much of the left against the role of the defense establishment in shaping research, leading to the Mansfield Amendments of 1970, 1971 and 1973 that prohibited ARPA from funding any research not directly related to the "defense function".[23] Together these forces were redirecting DARPA's focus to technologies like cryptography and artificial intelligence that were seen as directly supporting military objectives.

Yet even if the attention of the US government had not shifted, the internet was quickly growing out of its purview and control. As it became an increasingly global network, there was (as Dewey predicted) no clear public authority to make the investments required to meet the socio-technical challenges of making a network society a broader success. To quote Lick:

From the point of view of computer technology itself, export...fosters computer research and development [but that] (f)rom the point of view of mankind...the important thing would...be a wise rather than a rapid...development...Such crucial issues as security, privacy, preparedness, participation, and brittleness must be properly resolved before one can conclude that computerization and programmation are good for the individual and society...Although I do not have total confidence in the ability of the United States to resolve those issues wisely, I think it is more likely than any other country to do so. That makes me doubt whether export of computer technology will do as much for mankind as a vigorous effort by the United States to figure out what kind of future it really wants and then to develop the technology needed to realize it.

The declining role of public and social sector investment left absent core functions or layers that leaders like Lick and Nelson envisioned for the internet (e.g. identity, privacy/security, asset sharing, commerce), to which we return below. While there were tremendous advances to come in both applications running on top of the internet and in the WWW, much of the fundamental investment in protocols was wrapping up by the time of Lick's writing. The role of the public and social sectors in defining and innovating the network of networks was soon eclipsed.

Into the resulting vacuum stepped the increasingly eager private sector, flush with the success of the personal computer and inflated by the stirring celebrations of Reagan and Thatcher. While International Business Machines (IBM), which Lick feared would dominate and hamper the internet's development, proved unable to keep pace with technological change, it found many willing and able successors. A small group of telecommunications companies took over the internet backbone that the NSF freely relinquished. Web portals like America Online and Prodigy came to dominate most Americans' interactions with the web, as Netscape and Microsoft vied to dominate web browsing. The neglected identity functions were filled by the rise of Google and Facebook. The gap left by absent digital payments was filled by PayPal and Stripe. Absent the protocols for sharing data, computational power and storage that motivated work on the Intergalactic Computer Network in the first place, private infrastructures (often called "cloud providers") that enabled such sharing (such as Amazon Web Services and Microsoft Azure) became the platforms for building applications.[24]

While the internet backbone continued to improve in limited ways, adding security layers and some encryption, the basic features Lick and Nelson saw as essential were never integrated. Public financial support for the networking protocols largely dried up, with remaining open source development largely consisting of volunteer work or work supported by private corporations. As the world woke to the Age of the Internet, the dreams of its founders faded.

Flashbacks

Yet faded dreams have a stubborn persistence, nagging throughout a day. While Lick passed away in 1990, many of the early internet pioneers lived to see their triumph and tragedy.

Ted Nelson (shown in Figure C) and many other pioneers in Project Xanadu continue to carry their complaints about and reforms to the internet forward to this day. Engelbart, until his death in 2013, continued to speak, organize and write about his vision of "boosting Collective IQ". These activities included supporting, along with Terry Winograd (PhD advisor to the Google founders), a community around Online Deliberation based at Stanford University that nurtured key leaders of the next generation of ⿻, as we will see below. While none of these efforts met with the direct successes of their earlier years, they played critical roles as inspiration and in some cases even incubation for a new generation of ⿻ innovators, who have helped revive and articulate the dream of ⿻.

Nodes of light

While, as we highlighted in the introduction, the dominant thrust of technology has developed in directions that put it on a collision course with democracy, this new generation of leaders has formed a contrasting pattern, scattered but clearly discernible nodes of light that together give hope that with renewed common action, ⿻ could one day animate technology writ large. Perhaps the most vivid example for the average internet user is Wikipedia.

This open, non-profit collaborative project has become the leading global resource for reference and broadly shared factual information.[25] In contrast to the informational fragmentation and conflict that pervades much of the digital sphere that we highlighted in the introduction, Wikipedia has become a widely accepted source of shared understanding. It has done this through harnessing large-scale, open, collaborative self-governance.[26] Many aspects of this success are idiosyncratic and attempts to directly extend the model have had mixed success; trying to make such approaches more systematic and pervasive is much of our focus below. But the scale of the success is quite remarkable.[27] Recent analysis suggests that most web searches lead to results that prominently include Wikipedia entries. For all the celebration of the commercial internet, this one public, deliberative, participatory, and roughly consensual resource is perhaps its most common endpoint.

The concept of "Wiki," from which Wikipedia derives its name, comes from a Hawaiian word meaning "quick," and was coined by Ward Cunningham in 1995 when he created the first wiki software, WikiWikiWeb. Cunningham aimed to extend the web principles highlighted above of hypertextual navigation and inclusive ⿻ governance by allowing the rapid creation of linked databases.[28] Wikis invite all users, not just experts, to edit or create new pages using a standard web browser and to link them to one another, creating a dynamic and evolving web landscape in the spirit of ⿻.

While Wikis themselves have found significant applications, they have had an even broader impact in helping stimulate the "groupware" revolution that many internet users associate with products like Google Docs but that has its roots in the open source WebSocket protocol.[29] HackMD, a collaborative real-time Markdown editor, is used within the g0v community to collaboratively edit and openly share documents such as meeting minutes.[30] While collaboratively constructed documents illustrate this ethos, it more broadly pervades the very foundation of the online world itself. Open source software (OSS) embodies this ethos of participatory, networked, transnational self-governance. Significantly represented by the Linux operating system, OSS underlies the majority of public cloud infrastructures and connects with many through platforms like GitHub, boasting over 100 million contributors, growing rapidly in recent years especially in the developed world as pictured in Figure D. The Android OS, which powers over 70% of all smartphones, is an OSS project, despite being primarily maintained by Google. The success and impact of such "peer production" has forced the broad reconsideration of many assumptions underlying standard economic analysis.[31]

OSS emerged in reaction to the secretive and commercial direction the software industry took in the 1970s. The free and open development approach of the early days of ARPANET was sustained even after the withdrawal of public funding, thanks to a global volunteer workforce. Richard Stallman, opposing the closed nature of the Unix OS developed by AT&T, led the "free software movement", promoting the "GNU General Public License", which allowed users to run, study, share and modify the source code. The movement was eventually rebranded as OSS, with the goal of replacing Unix with an open-source alternative, most prominently Linux, led by Linus Torvalds.

OSS has expanded across various internet and computing sectors, even earning support from formerly hostile companies like Microsoft, now owner of leading OSS service company GitHub and employer of one of the authors of this book. This represents the practice of ⿻ on a large scale: emergent, collective co-creation of shared global resources. Communities form around shared interests, freely build on each other's work, vet contributions through unpaid maintainers, and "fork" projects into parallel versions in case of irreconcilable differences. The distributed version control system "git" supports collaborative tracking of changes, with platforms like GitHub and GitLab facilitating millions of developers' participation. This book is a product of such collaboration and has been supported by Microsoft and GitHub.

However, OSS faces challenges such as a chronic shortage of financial support following the withdrawal of public funding, as explored by Nadia Eghbal (now Asparouhova) in her book Working in Public. Maintainers often go unrewarded, and the community's growth increases the burden on them. Nonetheless, these challenges are addressable, and OSS, despite the limits of its business model, exemplifies the persistence of the open collaboration ethos (the lost dao) that ⿻ aims to support. Hence, OSS projects will be frequent examples in this book.

Another, contrasting reaction to the shift away from public investment in communication networking was exemplified by the work of Jaron Lanier, introduced above. A student and critic of AI pioneer Marvin Minsky, he sought to develop a technological program as ambitious as AI but centered on human experience and communication. Seeing existing forms of communication as constrained by symbols that the ears and eyes can process, like words and pictures, he aspired to enable deeper sharing of, and empathy for, experiences expressible only through senses like touch and proprioception (the sense of the body's own position and movement). Through his research and entrepreneurship during the 1980s, this aspiration developed into the field of "virtual reality", which has been a continual source of innovation in user interaction ever since, from the wired glove[35] to Apple's release of the Vision Pro.[36]

Yet, as we highlighted above, Lanier not only carried forward the cultural vision of the computer as a communication device but also championed Nelson's critique of the gaps and failings of what became the internet. He particularly emphasized the lack of base-layer protocols supporting payments, secure data sharing and provenance, as well as the lack of financial support for OSS. This advocacy combined with the pseudonymous Satoshi Nakamoto's invention of the Bitcoin protocol in 2008 to inspire a wave of work on these topics in and around "web3" communities, which harness cryptography and blockchains to create a shared understanding of provenance and value.[37] While many projects in the space have been influenced by libertarianism and hyper-financialization, the enduring connection to the original aspirations of the internet, especially under the leadership of Vitalik Buterin (who co-founded Ethereum, the largest smart contract platform), has inspired a number of efforts, like Gitcoin and decentralized identity, that are central inspirations for ⿻ today, as we explore below.
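
The idea of a "shared understanding of provenance" rests on a simple cryptographic mechanism: each record commits to a hash of the record before it, so rewriting history anywhere breaks every later link. The sketch below is a deliberately minimal, hypothetical hash-chained log in Python. It is not Bitcoin's or Ethereum's actual data format, and it omits consensus, proof-of-work, signatures and Merkle trees, all of which real blockchains add on top; the names `Record`, `append` and `verify` are our own.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Record:
    prev_hash: str  # hash of the previous record ("0" * 64 for the first)
    payload: dict   # hypothetical content, e.g. who contributed what


def record_hash(record: Record) -> str:
    # Serialize deterministically so every participant computes the same hash.
    data = json.dumps({"prev": record.prev_hash, "payload": record.payload},
                      sort_keys=True).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


def append(chain: list[Record], payload: dict) -> None:
    # Link the new record to the hash of the current last record.
    prev = record_hash(chain[-1]) if chain else "0" * 64
    chain.append(Record(prev_hash=prev, payload=payload))


def verify(chain: list[Record]) -> bool:
    # Each record must point at the hash of the one before it.
    for earlier, later in zip(chain, chain[1:]):
        if later.prev_hash != record_hash(earlier):
            return False
    return True


chain: list[Record] = []
append(chain, {"author": "alice", "work": "draft v1"})
append(chain, {"author": "bob", "work": "edit v2"})
print(verify(chain))                    # True
chain[0].payload["author"] = "mallory"  # tamper with history
print(verify(chain))                    # False
```

In this toy version, tampering with the newest record goes unnoticed until something is appended after it; real systems address this partly by having many independent parties replicate and agree on the latest hash.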

Other pioneers on these issues focused more on layers of communication and association than on provenance and value. Calling their work the "Decentralized Web" or the "Fediverse", they built protocols like Christine Lemmer-Webber's ActivityPub, which underpins non-commercial, community-based alternatives to mainstream social media such as Mastodon, while parallel efforts produced alternatives like Bluesky, the now-independent initiative that began inside Twitter. This space has also produced many of the most creative ideas for re-imagining identity and privacy with a foundation in social and community relationships.
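
For readers curious what a protocol like ActivityPub actually exchanges: servers send each other JSON documents in the W3C Activity Streams 2.0 vocabulary, typically an activity such as `Create` wrapping an object such as a `Note`. The Python sketch below only builds such a document. The actor and note URLs on `example.social` are hypothetical, the helper `create_note` is our own, and real federation additionally requires delivering the document to other servers' inboxes over HTTP (and, on Mastodon and many other servers, signing those requests), none of which is shown here.

```python
import json


def create_note(actor: str, note_id: str, text: str) -> dict:
    """Build an Activity Streams 2.0 'Create' activity wrapping a 'Note'."""
    return {
        "@context": "https://www.w3.org/ns/activitystreams",
        "type": "Create",
        "id": f"{note_id}/activity",
        "actor": actor,
        # Special collection meaning "addressed to everyone".
        "to": ["https://www.w3.org/ns/activitystreams#Public"],
        "object": {
            "type": "Note",
            "id": note_id,
            "attributedTo": actor,
            "content": text,
        },
    }


activity = create_note(
    actor="https://example.social/users/alice",            # hypothetical actor
    note_id="https://example.social/users/alice/notes/1",  # hypothetical ID
    text="Hello, fediverse!",
)
print(json.dumps(activity, indent=2))
```

Because any server that understands this shared vocabulary can read the message, users on different, independently run servers can follow and reply to one another, which is what makes the Fediverse federated rather than centralized.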

Finally, and perhaps most closely connected to our own paths to ⿻, have been the movements to revive the public and multisectoral spirit and ideals of the early internet by strengthening the digital participation of governments and democratic civil society. These "GovTech" and "Civic Tech" movements have harnessed OSS-style development practices to improve the delivery of government services and to bring the public into the process in a more diverse range of ways. Leaders in the US include Jennifer Pahlka, founder of GovTech pioneer Code for America, and Beth Simone Noveck, founder of The GovLab.[38]

Noveck, in particular, is a powerful bridge between the early development of ⿻ and its future. She was a driving force behind the Online Deliberation workshops mentioned above and developed Unchat, one of the earliest attempts at software to serve these goals, which helped inspire the work of vTaiwan and more.[39] She went on to pioneer, in her work with the US Patent and Trademark Office and later as Deputy Chief Technology Officer of the US, many of the transparent and inclusive practices that formed the core of the g0v movement we highlighted above.[40] Noveck was a critical mentor not just to g0v but to a range of other ambitious civic technology projects around the world: from Ushahidi, the Kenyan collective crisis-reporting platform founded by Juliana Rotich and collaborators, to European participatory policy-making platforms like Decidim, founded by Francesca Bria and collaborators, and CONSUL, which arose in Spain from the "Indignado" movement (a parallel to g0v) and on whose board one of us sits. Yet despite these important impacts, a variety of features of these settings have made it challenging for these examples to have the systemic, national and thus easily traceable macro-level impacts that g0v had in Taiwan.

Other countries have, of course, excelled in various elements of ⿻. Estonia is perhaps the leading example: it shares with Taiwan a strong history of Georgism and land taxes, is often cited as the most digitized democratic government in the world, and pioneered digital democracy earlier than almost any other country, starting in the late 1990s.[41] Finland has built on and scaled the success of its neighbor, extending digital inclusion deeper than Estonia into society, the educational system and the economy, as well as adopting elements of digitized democratic participation. Singapore has the most ambitious Georgist-style policies on earth and harnesses more creative ⿻ economic mechanisms and fundamental protocols than any other jurisdiction. South Korea has invested extensively in both digital services and digital competence education. New Zealand has pioneered internet-based voting and harnessed civil society to improve public service inclusion. Iceland has harnessed digital tools to extend democratic participation more extensively than any other jurisdiction. Kenya, Brazil and especially India have pioneered digital infrastructure for development. We will return to many of these examples in what follows.

Yet none of these have institutionalized the breadth and depth of ⿻ approaches to socio-technical organization across sectors that Taiwan has. It is thus more challenging to take these cases as broad national examples on which to ground our imagination of what ⿻ could mean to the world if it could scale up to bridge the divides of nation, culture and sector, forming both the infrastructural foundation and the mission of global digital society. With Taiwan as our anchoring example, and with additional hope drawn from these other cases, we now turn to painting in greater depth the opportunity that a ⿻ global future holds.

J.C.R. Licklider, "Computers and Government" in Michael L. Dertouzos and Joel Moses eds., The Computer Age: A Twenty-Year View (Cambridge, MA: MIT Press, 1980). ↩︎

Fred Turner, The Democratic Surround: Multimedia and American Liberalism from World War II to the Psychedelic Sixties (Chicago: University of Chicago Press, 2013). ↩︎

While we do not have space to pursue Deming's or Mead's stories in anything like the depth we do the development of the internet, the work of these two pioneers parallels many of the themes we develop and, in the industrial and cultural spheres, laid the groundwork for ⿻ just as Licklider and his disciples did in computation. UTHSC. “Deming’s 14 Points,” May 26, 2022. https://www.uthsc.edu/its/business-productivity-solutions/lean-uthsc/deming.php. ↩︎

Dan Davies, The Unaccountability Machine: Why Big Systems Make Terrible Decisions - and How The World Lost its Mind (London: Profile Books, 2024). ↩︎

M. Mitchell Waldrop, The Dream Machine (New York: Penguin, 2002). ↩︎

Katie Hafner and Matthew Lyon, Where the Wizards Stay up Late: The Origins of the Internet (New York: Simon & Schuster, 1998). ↩︎

Dickson, Paul. “Sputnik’s Impact on America.” NOVA | PBS, November 6, 2007. https://www.pbs.org/wgbh/nova/article/sputnik-impact-on-america/. ↩︎

J. C. R. Licklider. “Man-Computer Symbiosis,” March 1960. https://groups.csail.mit.edu/medg/people/psz/Licklider.html. ↩︎

“Douglas Engelbart Issues ‘Augmenting Human Intellect: A Conceptual Framework’ : History of Information,” October 1962. https://www.historyofinformation.com/detail.php?id=801. ↩︎

J.C.R. Licklider, "Memorandum For: Members and Affiliates of the Intergalactic Computer Network", 1963 available at https://worrydream.com/refs/Licklider_1963_-_Members_and_Affiliates_of_the_Intergalactic_Computer_Network.pdf. ↩︎

Engelbart, Christina. “Firsts: The Demo - Doug Engelbart Institute.” Doug Engelbart Institute, n.d. https://dougengelbart.org/content/view/209/. ↩︎

https://www.usnews.com/best-colleges/rankings/computer-science-overall ↩︎

J.C.R. Licklider and Robert Taylor, "The Computer as a Communication Device" Science and Technology 76, no. 2 (1967): 1-3. ↩︎

Michael A. Hiltzik, Dealers of Lightning: Xerox PARC and the Dawn of the Computer Age (New York: Harper Business, 2000). ↩︎

Paul Baran, "On Distributed Communications Networks," IEEE Transactions on Communications Systems 12, no. 1 (1964): 1-9. ↩︎

Theodor Holm Nelson, Literary Machines (Self-published, 1981), available at https://cs.brown.edu/people/nmeyrowi/LiteraryMachinesChapter2.pdf ↩︎

"Choose Your Own Adventure," interactive gamebooks based on Edward Packard's concept from 1976, peaked in popularity under Bantam Books in the '80s and '90s, with 250+ million copies sold. It declined in the '90s due to competition from computer games. ↩︎

Mailland and Driscoll, op. cit. ↩︎

World Bank, "World Development Indicators" December 20, 2023 at https://datacatalog.worldbank.org/search/dataset/0037712/World-Development-Indicators. ↩︎

Licklider and Taylor, op. cit. ↩︎

Licklider, "Computers and Government", op. cit. ↩︎

Jaron Lanier, You Are Not a Gadget: A Manifesto (New York: Vintage, 2011) and Who Owns the Future? (New York: Simon & Schuster, 2014). ↩︎

Phil Williams, "Whatever Happened to the Mansfield Amendment?" Survival: Global Politics and Strategy 18, no. 4 (1976): 146-153 and "The Mansfield Amendment of 1971" in The Senate and US Troops in Europe (London: Palgrave Macmillan, 1985): pp. 169-204. ↩︎

Ben Tarnoff, Internet for the People: The Fight for Our Digital Future (New York: Verso, 2022). ↩︎

In fact, researchers have studied reading patterns in terms of time spent by users across the globe. Nathan TeBlunthuis, Tilman Bayer, and Olga Vasileva, “Dwelling on Wikipedia,” Proceedings of the 15th International Symposium on Open Collaboration, August 20, 2019, https://doi.org/10.1145/3306446.3340829, (pp. 1-14). ↩︎

Sohyeon Hwang and Aaron Shaw, “Rules and Rule-Making in the Five Largest Wikipedias.” Proceedings of the International AAAI Conference on Web and Social Media 16 (May 31, 2022): 347–57, https://doi.org/10.1609/icwsm.v16i1.19297 studied rule-making on Wikipedia using 20 years of trace data. ↩︎

In an experiment, McMahon and colleagues found that a search engine with Wikipedia links increased relative click-through rate (a key search metric) by 80% compared to a search engine without Wikipedia links. Connor McMahon, Isaac Johnson, and Brent Hecht, “The Substantial Interdependence of Wikipedia and Google: A Case Study on the Relationship between Peer Production Communities and Information Technologies,” Proceedings of the International AAAI Conference on Web and Social Media 11, no. 1 (May 3, 2017): 142–51, https://doi.org/10.1609/icwsm.v11i1.14883. Motivated by this work, an audit study found that Wikipedia appears in roughly 70 to 80% of all search results pages for "common" and "trending" queries. Nicholas Vincent and Brent Hecht, “A Deeper Investigation of the Importance of Wikipedia Links to Search Engine Results,” Proceedings of the ACM on Human-Computer Interaction 5, no. CSCW1 (April 13, 2021): 1–15, https://doi.org/10.1145/3449078. ↩︎

Bo Leuf and Ward Cunningham, The Wiki Way: Quick Collaboration on the Web (Boston: Addison-Wesley, 2001). ↩︎

The term "groupware" was coined by Peter and Trudy Johnson-Lenz in 1978, with early commercial products such as Lotus Notes appearing in the 1990s to enable remote group collaboration. Google Docs, which originated from Writely (launched in 2005), widely popularized collaborative real-time editing. ↩︎

Scrapbox, which combines a real-time editor with a wiki system, is used by the Japanese forum of this book. Visitors to the forum can read the drafts and add questions, explanations, or links to related topics in real time. This interactive environment supports activities like book-reading events, where participants can write questions, engage in oral discussions, or take minutes of these discussions. The ability to rename keywords while preserving the link structure helps unify variations in terminology and provides a process for arriving at good translations. As more people read through, a network of knowledge grows that aids the understanding of subsequent readers. ↩︎

Yochai Benkler, “Coase’s Penguin, Or, Linux and the Nature of the Firm,” n.d. http://www.benkler.org/CoasesPenguin.PDF. ↩︎

GitHub Innovation graph at https://github.com/github/innovationgraph/ ↩︎

World Bank, "Population ages 15-64, total" at https://data.worldbank.org/indicator/SP.POP.1564.TO. ↩︎

Department of Household Registration, Ministry of the Interior, "Household Registration Statistics in January 2024" at https://www.ris.gov.tw/app/en/2121?sn=24038775. ↩︎

A wired glove is a glove-shaped input device. It allows users to interact with digital environments through gestures and movements, translating physical hand actions into digital responses. Jaron Lanier, Dawn of the New Everything: Encounters with Reality and Virtual Reality (New York: Henry Holt and Co., 2017). ↩︎

The Vision Pro is a head-mounted display released by Apple in 2024. The device integrates high-resolution displays with sensors that track the user's movements, hand actions and surroundings to offer an immersive mixed reality experience. ↩︎

Satoshi Nakamoto, "Bitcoin: A Peer-to-Peer Electronic Cash System" at https://assets.pubpub.org/d8wct41f/31611263538139.pdf. ↩︎

Jennifer Pahlka, Recoding America: Why Government is Failing in the Digital Age and How We Can Do Better (New York: Macmillan, 2023). Beth Simone Noveck, Wiki Government: How Technology Can Make Government Better, Democracy Stronger, and Citizens More Powerful (New York: Brookings Institution Press, 2010). ↩︎

Beth Noveck, “Designing Deliberative Democracy in Cyberspace: The Role of the Cyber-Lawyer,” New York Law School, n.d. https://digitalcommons.nyls.edu/cgi/viewcontent.cgi?article=1580&context=fac_articles_chapters; Beth Noveck, “A Democracy of Groups,” First Monday 10, no. 11 (November 7, 2005), https://doi.org/10.5210/fm.v10i11.1289. ↩︎

Beth Simone Noveck, Wiki Government, op. cit.; Vivek Kundra and Beth Noveck, “Open Government Initiative,” Internet Archive, June 3, 2009, https://web.archive.org/web/20090603192345/http://www.whitehouse.gov/open/. ↩︎

Gary Anthes, "Estonia: a Model for e-Government" Communications of the ACM 58, no. 6 (2015): 18-20. ↩︎